Did you know that deep neural networks can exceed 90% accuracy on tasks like image recognition? This breakthrough has reshaped industries, from healthcare to finance. But how did we get here?

The modern era began in 2006, when researchers like Geoffrey Hinton reignited interest in neural networks with work on deep belief networks. That work built on multilayer perceptron networks and the backpropagation technique popularized in the 1980s, and it laid the foundation for modern AI. Today, these advancements power everything from voice assistants to self-driving cars.

What makes deep learning so revolutionary? It’s the ability to learn from vast amounts of data using multi-layer architectures. This mimics the human brain, enabling machines to solve complex problems with unprecedented precision.

As we explore the evolution of neural networks, we’ll uncover how historical milestones have shaped today’s Industry 4.0. Ready to dive deeper? Let’s embark on this innovative journey together.

Key Takeaways

- Deep neural networks achieve high accuracy in tasks like image recognition.

- Geoffrey Hinton’s 2006 work revived interest in neural networks.

- Multilayer perceptron networks and backpropagation are foundational techniques.

- Deep learning mimics the human brain to solve complex problems.

- Modern AI applications include voice assistants and self-driving cars.

Introduction to Deep Learning and AI

Artificial intelligence has transformed how we interact with technology. At its core, AI encompasses a range of techniques that enable machines to perform tasks requiring human-like intelligence. One of its most powerful subsets is deep learning, which builds on decades of research in machine learning.

Early learning algorithms were simple, often limited to single-layer networks. Over time, these evolved into multi-layer architectures capable of handling complex data. This shift marked the beginning of modern AI systems, which now power everything from voice assistants to self-driving cars.

Today’s AI builds on foundational breakthroughs in neural networks. Researchers like Geoffrey Hinton helped popularize techniques such as backpropagation, enabling machines to learn from vast datasets. These advancements have made AI more accurate and versatile than ever before.

Deep learning’s strength lies in its ability to mimic the human brain. By processing data through multiple layers, it can identify patterns and solve problems with remarkable precision. This has opened doors to innovations across industries, from healthcare to finance.

| Key Concept | Description |
| --- | --- |
| Machine Learning | A subset of AI that focuses on algorithms learning from data. |
| Learning Algorithm | Methods used by machines to improve performance on tasks. |
| Artificial Intelligence | The broader field of creating intelligent machines. |

As we explore AI’s evolution, it’s clear that deep learning is a cornerstone of modern technology. Its ability to learn and adapt continues to drive innovation, making it an essential tool for the future.

Unlocking AI Conversations with DeepSeek Chat

Imagine having a conversation with AI that feels as natural as chatting with a friend. DeepSeek Chat makes this possible, offering a state-of-the-art solution that harnesses the power of deep neural networks to create engaging and intuitive interactions. Whether you’re seeking instant answers, brainstorming ideas, or need virtual assistance, this tool is designed to transform how you communicate.

Features of DeepSeek Chat

What sets DeepSeek Chat apart? Here are some standout features:

- 24/7 Availability: Access support and insights anytime, anywhere.

- User-Friendly Interface: Designed for ease of use, even for beginners.

- Natural Language Processing: Understands and responds to queries with human-like accuracy.

These features make it a versatile tool for both personal and professional use.

Transforming Communication with AI

AI-driven conversations are no longer a thing of the future. With DeepSeek Chat, interactions are smarter, faster, and more efficient. It leverages natural language processing to understand context, tone, and intent, delivering responses that feel genuinely human.

“The ability to communicate seamlessly with AI is revolutionizing industries, from customer service to creative collaboration.”

Real-world applications include:

| Use Case | Benefit |
| --- | --- |
| Customer Support | Instant, accurate responses to queries. |
| Creative Brainstorming | Generates ideas and solutions in real-time. |
| Virtual Assistance | Handles tasks and schedules effortlessly. |

Ready to experience the future of communication? Unlock the Power of AI Conversations Today! Visit DeepSeek Chat and discover how it can enhance your interactions.

The Evolution and History of Deep Learning

Since their resurgence in the 1980s, neural networks have revolutionized AI. Early research focused on simple architectures such as the single-layer perceptron of the late 1950s, but breakthroughs like backpropagation paved the way for more complex systems. These advancements laid the groundwork for modern applications, from speech recognition to image processing.

One of the most significant milestones was the introduction of deep belief networks in the 2000s. Researchers like Geoffrey Hinton demonstrated how multi-layer architectures could process vast amounts of data with remarkable accuracy. This innovation transformed industries, enabling machines to tackle tasks once thought impossible.

Milestones from the 1980s to Today

The 1980s marked the rise of neural networks as a viable approach to AI. Key developments included:

- The popularization of backpropagation, enabling efficient training of multi-layer networks.

- The creation of early models for speech recognition, setting the stage for modern voice assistants.

- The introduction of convolutional neural networks, which revolutionized image processing.

By the 2000s, advancements in hardware and algorithms accelerated progress. The ability to process large datasets allowed neural networks to achieve unprecedented accuracy. Today, these technologies power everything from self-driving cars to personalized recommendations.

As we look to the future, the evolution of neural networks continues to inspire innovation. From their roots in the 1980s to their current applications, these systems have reshaped how we interact with technology. The journey is far from over, and the possibilities are endless.

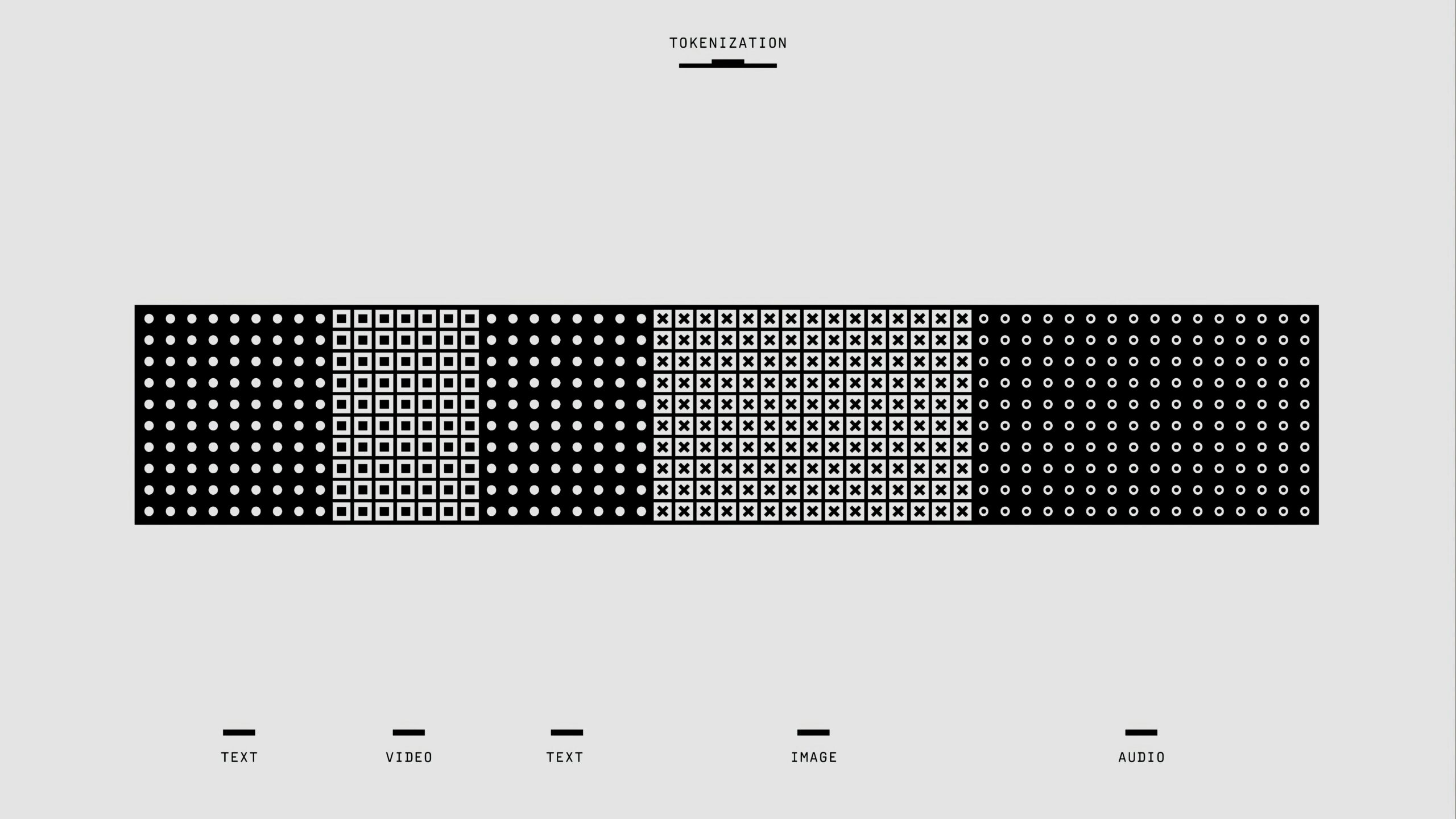

Deep Learning Is a Specialized Branch of Machine Learning

The rise of multi-layer architectures has redefined how machines process information. While machine learning focuses on algorithms that learn from data, deep learning takes this a step further. It uses multiple layers to extract higher-order features, enabling more complex data representations.

What makes this approach unique? It’s the ability to mimic the human brain’s structure. Each layer in a neural network processes data at a different level of abstraction. This allows the system to identify patterns and solve problems with remarkable precision.

Historical advancements like convolutional neural networks and modern models such as transformers showcase this specialization. These innovations have transformed industries, from healthcare to finance, by enabling machines to handle tasks once thought impossible.

Why does this matter? The ability to process vast amounts of data through multiple layers drives innovation across the machine learning field. It’s a cornerstone of modern AI, paving the way for smarter, more efficient systems.

| Key Concept | Description |
| --- | --- |
| Machine Learning | Algorithms that learn from data to improve performance. |
| Deep Learning | Uses multi-layer architectures for complex data processing. |
| Neural Network Layers | Each layer extracts higher-order features from input data. |

As we continue to explore the potential of deep learning, its impact on technology and industry grows. These advancements are not just innovations—they’re the foundation of a smarter future.

Fundamentals of Neural Networks and Deep Neural Networks

Understanding neural networks is key to unlocking the potential of artificial intelligence. These systems, inspired by the human brain, form the foundation of modern AI. By mimicking how neurons process information, they enable machines to learn and adapt.

At their core, neural networks consist of layers of interconnected nodes. Each node processes data using activations, weights, and biases. These components work together to transform input data into meaningful outputs.

Basic Principles of Neural Processing

Neural networks rely on a series of steps to process information. First, input data is fed into the network. Each layer applies weights and biases to the data, adjusting its importance. Activations then determine how much of this processed data is passed to the next layer.

This process is powered by algorithms like backpropagation. Backpropagation adjusts weights and biases based on errors, improving the network’s accuracy over time. It’s a cornerstone of modern AI systems.
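To make the steps above concrete, here is a minimal sketch of a single neuron in plain Python. It assumes a sigmoid activation, a squared-error loss, and hand-picked starting values; the function names `forward` and `train_step` are illustrative, not taken from any library.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(x, w, b):
    """Weighted sum of the inputs plus a bias, passed through a sigmoid activation."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return sigmoid(z)

def train_step(x, target, w, b, lr=0.5):
    """One backpropagation update for the loss 0.5 * (y - target) ** 2."""
    y = forward(x, w, b)
    # Chain rule: dLoss/dz = (y - target) * sigmoid'(z), where sigmoid'(z) = y * (1 - y)
    delta = (y - target) * y * (1.0 - y)
    new_w = [wi - lr * delta * xi for wi, xi in zip(w, x)]
    new_b = b - lr * delta
    return new_w, new_b

# Assumed toy data: one input example and the output we want for it.
x, target = [1.0, 0.5], 1.0
w, b = [0.1, -0.2], 0.0

before = abs(forward(x, w, b) - target)
for _ in range(100):
    w, b = train_step(x, target, w, b)
after = abs(forward(x, w, b) - target)

print(f"error before: {before:.3f}, after: {after:.3f}")
```

Each call to `train_step` nudges the weights and bias in the direction that shrinks the error, which is the same idea real frameworks apply across millions of parameters.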

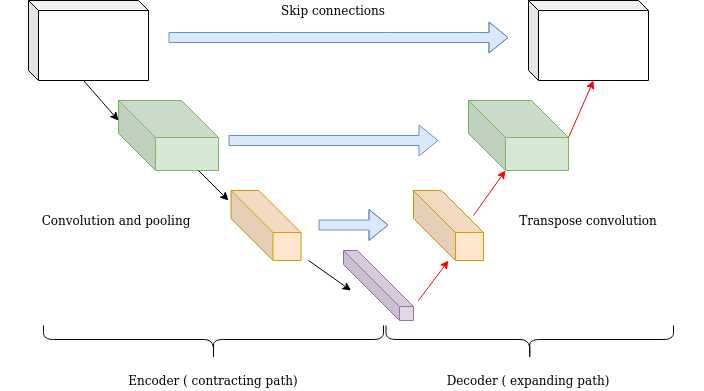

From Shallow to Deep Architectures

Early neural networks used shallow architectures with only a few layers. While effective for simple tasks, they struggled with complex data. The shift to deep architectures changed this.

Deep neural networks use multiple layers to extract higher-order features. This allows them to handle tasks like image recognition with remarkable precision. For example, convolutional neural networks excel at identifying patterns in visual data.

Here’s how they differ:

- Shallow Networks: Limited layers, ideal for basic tasks.

- Deep Networks: Multiple layers, capable of solving complex problems.
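A classic way to see why extra layers matter is the XOR problem. The sketch below is a toy illustration with hand-set weights (nothing is trained, and all names are hypothetical): a brute-force search finds no single neuron that computes XOR on a small grid of weights, while stacking one hidden layer in front of the output neuron solves it.

```python
def step(z):
    return 1 if z > 0 else 0

def neuron(inputs, weights, bias):
    """A single threshold unit: weighted sum plus bias, then a step activation."""
    return step(sum(w * x for w, x in zip(weights, inputs)) + bias)

XOR_CASES = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

def single_neuron_fits_xor(candidates):
    """Try every weight/bias combination from `candidates` for one neuron."""
    for w1 in candidates:
        for w2 in candidates:
            for bias in candidates:
                if all(neuron(x, [w1, w2], bias) == y for x, y in XOR_CASES):
                    return True
    return False

def xor_two_layer(a, b):
    """Hidden layer computes OR and AND; the output fires for OR-but-not-AND."""
    h_or = neuron([a, b], [1, 1], -0.5)
    h_and = neuron([a, b], [1, 1], -1.5)
    return neuron([h_or, h_and], [1, -1], -0.5)

grid = [i / 2 for i in range(-8, 9)]  # weights from -4.0 to 4.0 in steps of 0.5
print(single_neuron_fits_xor(grid))                      # False
print([xor_two_layer(a, b) for (a, b), _ in XOR_CASES])  # [0, 1, 1, 0]
```

The search only covers a finite grid, but the result reflects a general fact: XOR is not linearly separable, so no single threshold unit can represent it.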

These advancements have expanded the application of neural networks across industries. From healthcare to finance, they’re driving innovation and efficiency.

By understanding these fundamentals, we can better appreciate the power of neural networks. They’re not just tools—they’re the building blocks of a smarter future.

Exploring Deep Neural Networks Architectures

Modern technology thrives on the power of neural architectures. These designs form the foundation of intelligent systems, enabling machines to perform complex tasks with remarkable precision. From image recognition to language processing, the right architecture can make all the difference.

Overview of Popular Models

Several models dominate the field of neural networks. Convolutional Neural Networks (CNNs) excel in image and video processing. Recurrent Neural Networks (RNNs) are ideal for sequential data like text and speech. Transformers, on the other hand, have revolutionized natural language processing with their attention mechanisms.

Each model has a unique structure tailored to specific tasks. CNNs use convolutional layers to detect patterns in visual data. RNNs rely on loops to process sequences, while transformers use self-attention to understand context in text.

Comparative Advantages and Limitations

When choosing a model, it’s essential to weigh its strengths and weaknesses. CNNs are highly accurate for image-related tasks but require significant computational resources. RNNs handle sequential data well but struggle with long-term dependencies. Transformers offer unparalleled accuracy in language tasks but demand large datasets and processing power.

Here’s a quick comparison:

| Model | Strengths | Limitations |
| --- | --- | --- |
| CNNs | High accuracy in image processing | Computationally intensive |
| RNNs | Effective for sequential data | Struggles with long-term dependencies |
| Transformers | Superior in language tasks | Requires large datasets and resources |

Understanding these trade-offs helps in selecting the right system for the task at hand. Whether processing visual input or analyzing text, the choice of architecture directly impacts performance and efficiency.

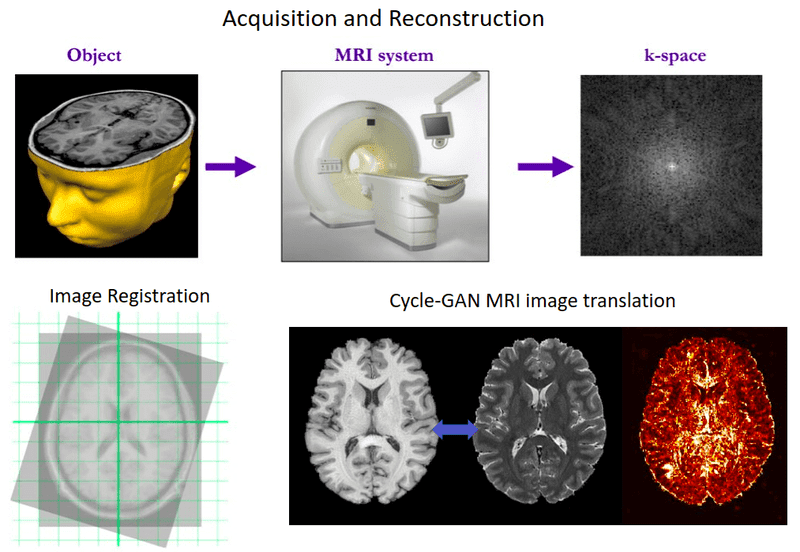

Understanding Convolutional Neural Networks (CNNs)

Convolutional Neural Networks (CNNs) have become a cornerstone of modern image recognition. These systems are designed to process visual data, transforming input images into meaningful classifications. Their ability to identify patterns and features makes them indispensable in computer vision.

Key Concepts in CNNs

At the heart of CNNs are convolution layers. These layers apply filters to the input image, detecting edges, textures, and other features. The process mimics how the human brain processes visual information, making CNNs highly effective for tasks like object detection.

Another critical component is the pooling layer. This layer reduces the spatial size of the data, focusing on the most important features. It helps improve efficiency and reduces the risk of overfitting.

Here’s how CNNs work step-by-step:

- Input Layer: Receives the raw image data.

- Convolution Layer: Applies filters to detect features.

- Pooling Layer: Downsizes the data to focus on key features.

- Fully Connected Layer: Produces the final classification output.
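The convolution and pooling steps listed above can be sketched in a few lines of plain Python. This is a simplified illustration, assuming a single-channel image, no padding, a stride of 1, and a hand-picked edge filter; in a real CNN the filter values are learned during training.

```python
def conv2d(image, kernel):
    """Slide the kernel over the image (no padding, stride 1) and sum the products."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

def max_pool(feature_map, size=2):
    """Keep the largest value in each non-overlapping size x size window."""
    h = len(feature_map) // size * size
    w = len(feature_map[0]) // size * size
    return [
        [
            max(feature_map[i + di][j + dj]
                for di in range(size) for dj in range(size))
            for j in range(0, w, size)
        ]
        for i in range(0, h, size)
    ]

# Assumed toy input: a 5x5 image that is dark (0) on the left and bright (1) on the right.
image = [[0, 0, 1, 1, 1] for _ in range(5)]
# Hypothetical hand-picked filter that responds to dark-to-bright vertical edges.
kernel = [[-1, 1],
          [-1, 1]]

features = conv2d(image, kernel)  # 4x4 feature map
pooled = max_pool(features)       # 2x2 summary

print(features[0])  # [0, 2, 0, 0]
print(pooled)       # [[2, 0], [2, 0]]
```

The feature map lights up only where the edge sits, and pooling keeps that signal while shrinking the data from 16 values to 4.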

Applications in Computer Vision

CNNs have revolutionized various fields through their applications in computer vision. One of the most notable uses is in facial recognition, where they identify and verify individuals with high accuracy. This technology is widely used in security systems and social media platforms.

Another significant application is in automated inspections. CNNs can analyze images from manufacturing lines to detect defects or anomalies. This ensures quality control and reduces human error.

Here are some other areas where CNNs are making an impact:

- Surveillance: Monitoring and analyzing video feeds in real-time.

- Augmented Reality: Enhancing user experiences by overlaying digital information on real-world images.

- Medical Imaging: Assisting in diagnosing diseases through image analysis.

By leveraging CNNs, industries are achieving higher efficiency and accuracy in visual tasks. Their ability to process complex data makes them a vital tool in the modern technological landscape.

Harnessing Recurrent Neural Networks (RNNs) for Sequential Data

Recurrent Neural Networks (RNNs) changed how machines handle sequential information. Unlike traditional feedforward neural networks, RNNs are built to process ordered data such as speech, text, and time-series inputs. Their unique memory capabilities make them ideal for tasks requiring context and continuity.

Memory and Sequence Modeling

RNNs are designed to remember past inputs, making them perfect for sequence modeling. Each step in the network retains information from previous steps, allowing it to understand context. This feature is crucial for tasks like language translation, where word order matters.

For example, in speech recognition, RNNs analyze audio signals over time. They capture patterns in pronunciation and intonation, delivering accurate transcriptions. This ability to process data sequentially sets RNNs apart from other models.
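Here is a minimal sketch of that memory at work. It assumes a scalar, Elman-style recurrent cell with a tanh activation and fixed, hand-picked weights (`w_x`, `w_h`, and `b` are illustrative values, not trained ones).

```python
import math

def rnn_step(x, h_prev, w_x, w_h, b):
    """New hidden state mixes the current input with the previous hidden state."""
    return math.tanh(w_x * x + w_h * h_prev + b)

def run_rnn(sequence, w_x=1.0, w_h=0.5, b=0.0):
    h = 0.0  # the hidden state starts empty
    states = []
    for x in sequence:
        h = rnn_step(x, h, w_x, w_h, b)
        states.append(h)
    return states

with_signal = run_rnn([1.0, 0.0, 0.0])
without_signal = run_rnn([0.0, 0.0, 0.0])

# The input at step 1 still influences the state at step 3.
print(with_signal[-1] > without_signal[-1])  # True

# Order matters: the same values in a different order give a different final state.
print(run_rnn([1.0, 0.0])[-1] != run_rnn([0.0, 1.0])[-1])  # True
```

Because the hidden state is fed back in at every step, the network’s output depends on the whole history of inputs and not just the most recent one.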

Overcoming Training Challenges

Despite their strengths, RNNs face challenges like vanishing gradients. During training, the error signal is multiplied by a small factor at every time step it travels back through, so it can shrink toward zero and leave the network unable to learn from earlier steps in long sequences. Gated architectures such as Long Short-Term Memory (LSTM) networks and Gated Recurrent Units (GRUs) address this by improving memory retention.

These advancements have made RNNs more efficient and reliable. They now power applications like predictive text, chatbots, and even financial forecasting. By overcoming these hurdles, RNNs continue to push the boundaries of AI.
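The vanishing gradient problem mentioned above is easy to reproduce in miniature. The sketch below assumes a scalar tanh RNN with a fixed recurrent weight of 0.5 and a constant input (both illustrative choices) and tracks how strongly the hidden state at each step still depends on the initial state.

```python
import math

def gradient_through_time(steps, w_h=0.5, x=0.5):
    """Return d h_t / d h_0 for t = 1..steps in a scalar tanh RNN."""
    h = 0.0
    grad = 1.0
    history = []
    for _ in range(steps):
        h = math.tanh(w_h * h + x)
        # Chain rule for one step: d h_t / d h_(t-1) = w_h * (1 - h_t ** 2)
        grad *= w_h * (1.0 - h * h)
        history.append(grad)
    return history

grads = gradient_through_time(30)
print(f"after 1 step:   {grads[0]:.3e}")
print(f"after 10 steps: {grads[9]:.3e}")
print(f"after 30 steps: {grads[29]:.3e}")
```

Every step multiplies the gradient by a factor below 0.5 here, so after 30 steps almost nothing is left. Gated cells such as LSTMs and GRUs add paths through which this signal can pass with far less shrinkage.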

Here’s how RNNs are transforming industries:

- Speech Recognition: Enables accurate voice-to-text conversion for virtual assistants.

- Natural Language Processing: Powers chatbots and language translation tools.

- Time-Series Analysis: Predicts trends in stock markets and weather patterns.

RNNs are a testament to the power of artificial neural networks. Their ability to process sequential data with memory and context is reshaping how we interact with technology. From speech to text, these networks are unlocking new possibilities in AI.

Generative Models: VAEs, GANs, and Diffusion Models

Generative models are reshaping how machines create and innovate. These systems excel at producing synthetic data, from realistic images to coherent text. By learning patterns from existing datasets, they unlock new possibilities in artificial intelligence.

At their core, generative models use advanced algorithms to mimic real-world data. They’ve evolved from simple autoencoders to sophisticated systems like VAEs, GANs, and diffusion models. Each approach has unique strengths, making them suitable for diverse applications.

Innovations and Use Cases in Generative AI

Generative models have revolutionized industries by enabling creativity and efficiency. For example, GANs can generate photorealistic images, while VAEs are ideal for data compression. Diffusion models, on the other hand, excel at producing high-quality outputs from noisy inputs.

Here’s a comparison of these models:

- VAEs: Focus on data compression and reconstruction, often used in anomaly detection.

- GANs: Generate realistic images, videos, and audio, transforming creative industries.

- Diffusion Models: Produce high-quality outputs by reversing noise, ideal for image synthesis.
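To give a feel for what "reversing noise" means, the sketch below shows only the forward half of a diffusion process: gradually blending data with Gaussian noise. It assumes the closed-form noising rule and linear noise schedule commonly used for DDPM-style models, with illustrative default values. The reverse (generative) half needs a trained neural network and is not shown.

```python
import math
import random

def alpha_bars(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Cumulative fraction of signal kept after each step of a linear schedule."""
    bars, product = [], 1.0
    for t in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * t / (num_steps - 1)
        product *= 1.0 - beta
        bars.append(product)
    return bars

def add_noise(x0, alpha_bar, rng):
    """Sample x_t = sqrt(alpha_bar) * x0 + sqrt(1 - alpha_bar) * noise."""
    signal = math.sqrt(alpha_bar)
    noise = math.sqrt(1.0 - alpha_bar)
    return [signal * v + noise * rng.gauss(0.0, 1.0) for v in x0]

rng = random.Random(0)     # fixed seed so the run is repeatable
x0 = [1.0, -1.0, 0.5]      # assumed toy "data point"
bars = alpha_bars()

slightly_noisy = add_noise(x0, bars[0], rng)   # first step: almost unchanged
mostly_noise = add_noise(x0, bars[-1], rng)    # last step: almost pure noise

print(f"signal kept at first step: {bars[0]:.4f}")
print(f"signal kept at last step:  {bars[-1]:.6f}")
```

A diffusion model is trained to undo these steps one at a time, which is how it can start from random noise and end with a clean sample.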

Recent research breakthroughs have enhanced their capabilities. For instance, GANs can now create images and artwork that are difficult to tell apart from human-made works. Diffusion models are also being explored in medical imaging research, where they are used to synthesize and reconstruct detailed scans.

These models also play a crucial role in data augmentation. By creating synthetic datasets, they improve the performance of AI systems over time. This adaptability makes them essential for tasks like fraud detection and personalized recommendations.

In creative industries, generative models are transforming workflows. Designers use them to prototype ideas, while filmmakers leverage them for visual effects. Their ability to generate content quickly and accurately is unmatched.

As AI continues to evolve, generative models will drive innovation. They’re not just tools—they’re catalysts for progress, making intelligence more versatile and impactful.

Transformers: Revolutionizing Language and Vision

Imagine a world where machines understand language and images as effortlessly as humans. Transformers have made this a reality, redefining how AI systems process and interpret complex data. Unlike traditional models, transformers excel at handling both sequential and visual information, making them a cornerstone of modern AI.

Architecture and Functionality

At the heart of the original transformer lies an encoder-decoder structure built on attention instead of recurrence. This design allows the model to process every position of an input sequence in parallel, significantly speeding up training. Unlike older models such as RNNs, which step through a sequence one element at a time, transformers can analyze an entire sequence at once, making them highly efficient on modern hardware.

Another key feature is their use of attention mechanisms. These mechanisms enable transformers to focus on the most relevant parts of the input, whether it’s a sentence or an image. This approach minimizes human intervention while maximizing accuracy, making transformers ideal for solving complex problems.
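The core of an attention mechanism fits in a short function. This is a minimal sketch of scaled dot-product attention in plain Python, assuming tiny hand-written vectors; real transformers add learned projection matrices and multiple attention heads, which are left out here.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """For each query, average the values, weighted by query-key similarity."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
            for k in keys
        ]
        weights = softmax(scores)
        output = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(output)
        all_weights.append(weights)
    return outputs, all_weights

# Assumed toy inputs: three positions, each with a key vector and a value.
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[10.0], [20.0], [30.0]]
queries = [[4.0, 0.0]]  # this query "looks for" the first feature

outputs, weights = attention(queries, keys, values)
print([round(w, 3) for w in weights[0]])
print(outputs[0])
```

The second key does not match the query, so its weight is close to zero, and the output is dominated by the first and third values. Every query can be scored against every key independently, which is what makes the computation easy to run in parallel.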

Applications in Language and Vision

Transformers have transformed how we approach language and vision tasks. In language processing, they power tools like machine translation and summarization. For example, models like GPT and BERT use the transformer architecture to generate and interpret text with strong contextual accuracy.

In computer vision, transformers are equally groundbreaking. They’re used in tasks like image classification and object detection, where their ability to process large datasets quickly is invaluable. This versatility has made transformers a go-to solution for industries ranging from healthcare to entertainment.

- Parallel Processing: Speeds up training by analyzing data simultaneously.

- Attention Mechanisms: Focus on relevant parts of the input for better accuracy.

- Versatility: Effective in both language and vision tasks.

By combining efficiency with precision, transformers have set a new standard in AI. Their ability to handle sophisticated prediction challenges with minimal human intervention is reshaping industries and driving innovation. Whether it’s translating languages or analyzing images, transformers are at the forefront of AI’s evolution.

Applications of Deep Learning in Industry and Research

From healthcare to cybersecurity, deep learning is driving innovation across sectors. Its ability to process vast amounts of data with precision has transformed industries, enabling solutions once thought impossible. Let’s explore how this technology is reshaping the world.

Impact in Healthcare

In healthcare, deep learning is revolutionizing diagnosis and treatment. For example, it powers systems that analyze medical images to detect diseases like cancer with high accuracy. These advancements reduce human error and improve patient outcomes.

Another key function is in drug discovery. By analyzing molecular structures, deep learning accelerates the development of new medications. This not only saves time but also reduces costs, making treatments more accessible.

Advancements in Cybersecurity

Cybersecurity benefits greatly from deep learning’s ability to detect threats in real-time. For instance, it identifies unusual patterns in network traffic, flagging potential attacks before they cause harm. This proactive approach enhances digital security across industries.

Another example is in fraud detection. Deep learning algorithms analyze transaction data to spot suspicious activities, protecting businesses and consumers alike. These systems adapt over time, becoming more effective with each use.

Natural Language and Computer Vision

Deep learning excels in natural language processing, enabling machines to understand and generate human-like text. This powers tools like chatbots and language translation, making communication more seamless.

In computer vision, it’s used for tasks like facial recognition and object detection. For example, self-driving cars rely on deep learning to navigate roads safely. These applications are transforming industries from transportation to retail.

Academic Research and Industrial Innovations

Academic research and industrial applications are mutually reinforcing progress in deep learning. Universities develop cutting-edge algorithms, while industries implement them in real-world scenarios. This collaboration drives continuous improvement and innovation.

For example, research in natural language processing has led to advanced virtual assistants. These tools are now widely used in customer service, enhancing user experiences and operational efficiency.

| Industry | Application | Benefit |
| --- | --- | --- |
| Healthcare | Medical Imaging | Improved diagnosis accuracy |
| Cybersecurity | Threat Detection | Real-time protection |
| Retail | Customer Service Chatbots | Enhanced user experience |
| Transportation | Self-Driving Cars | Safer navigation |

Deep learning’s versatility is unlocking new possibilities across industries. From healthcare to cybersecurity, its applications are transforming how we live and work. As research and innovation continue, the potential for this technology is limitless.

Hardware and Data Dependencies in Deep Learning

The backbone of any successful AI system lies in its hardware and data foundation. Without robust infrastructure and high-quality data, even the most advanced algorithms struggle to deliver accurate results. Let’s explore the critical role of GPUs, computational power, and data quality in training efficient models.

GPU Utilization and Computational Needs

High-performance GPUs are essential for processing large-scale models. These processors run the matrix and tensor operations behind neural networks in parallel, speeding up training times significantly. Without them, tasks like image recognition or language processing would take far longer to train.

Robust hardware also ensures efficient input-output processes. Faster data transfer between components reduces bottlenecks, allowing models to train more effectively. This balance between computational power and speed is crucial for achieving optimal performance.

Data Volume and Quality Considerations

The accuracy of a model’s prediction depends heavily on the volume and quality of its training data. Larger datasets provide more examples for the system to learn from, improving its ability to generalize. However, data quality is equally important—clean, well-labeled data ensures the model learns the right patterns.

Automated tools can help preprocess data, removing noise and inconsistencies. This step is vital for maintaining the integrity of the training process. By focusing on both quantity and quality, we can build models that deliver reliable results.

“The right hardware and data can make or break a deep learning project. Investing in both is non-negotiable for success.”

Here’s a quick comparison of hardware and data requirements:

| Component | Role |
| --- | --- |
| GPUs | Accelerate complex computations |
| Data Volume | Provides diverse examples for training |
| Data Quality | Ensures accurate pattern recognition |

By understanding these dependencies, we can build systems that leverage the full potential of deep learning. Whether it’s optimizing hardware or refining datasets, every detail matters in achieving groundbreaking results.

Optimizing Training: From Backpropagation to Modern Techniques

Training neural networks has come a long way since the early days of backpropagation. This foundational algorithm, popularized in the 1980s, revolutionized how machines learn by adjusting weights based on errors. Over time, advancements in processing power and innovative techniques have transformed training methods, making them faster and more efficient.

Key Training Algorithms and Their Evolution

Backpropagation was the first major breakthrough in training neural networks. It works by calculating the gradient of the loss function and adjusting weights to minimize errors. While effective, it faced challenges like vanishing gradients, which slowed learning in deep networks.

Modern techniques have eased these issues. For example, large language models like GPT are typically trained with adaptive optimizers such as Adam, which builds on ideas from RMSprop. These algorithms adapt the learning rate for each parameter dynamically, improving convergence and reducing training time.

Here’s how training methods have evolved:

- Backpropagation: The foundation of neural network training, adjusting weights based on error gradients.

- Gradient Descent Variants: Techniques like Adam and RMSprop optimize learning rates for faster convergence.

- Batch Normalization: Stabilizes training by normalizing the inputs to each layer, with a mild regularizing effect that can reduce overfitting.
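To show what an adaptive optimizer does differently from plain gradient descent, here is a minimal sketch of the Adam update rule for a single parameter. It uses the commonly cited default values for `beta1`, `beta2`, and `eps`; the toy loss function and the learning rate are assumptions chosen for illustration.

```python
import math

def adam_minimize(grad_fn, w, steps=2000, lr=0.01,
                  beta1=0.9, beta2=0.999, eps=1e-8):
    """Minimize a one-parameter function given its gradient, using Adam."""
    m = 0.0  # running average of gradients (momentum)
    v = 0.0  # running average of squared gradients (scale)
    for t in range(1, steps + 1):
        g = grad_fn(w)
        m = beta1 * m + (1 - beta1) * g
        v = beta2 * v + (1 - beta2) * g * g
        # Bias correction: both averages start at zero and would be too small early on
        m_hat = m / (1 - beta1 ** t)
        v_hat = v / (1 - beta2 ** t)
        # Step size adapts to the recent gradient scale
        w -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return w

# Assumed toy loss: (w - 3) ** 2, whose gradient is 2 * (w - 3) and minimum is at w = 3.
w_final = adam_minimize(lambda w: 2.0 * (w - 3.0), w=0.0)
print(f"{w_final:.3f}")
```

Dividing by the running gradient scale means each parameter gets its own effective learning rate, which is why Adam often needs less manual tuning than a fixed-rate method.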

These advancements have streamlined model development, enabling faster deployment and better performance. For instance, modern processing hardware like GPUs accelerates training, making it feasible to handle massive datasets.

Decision-Making in Training Frameworks

Training frameworks have also evolved to improve decision-making. Early systems relied on manual tuning of hyperparameters, which was time-consuming and error-prone. Today, automated tools like AutoML optimize these parameters, enhancing model accuracy and efficiency.

Another innovation is reinforcement learning, where models learn by interacting with their environment. This approach has been particularly effective in robotics and game AI, enabling machines to make complex decisions in real-time.

“The evolution of training algorithms has been pivotal in shaping modern AI systems. From backpropagation to advanced optimization, these methods continue to push the boundaries of what machines can achieve.”

By leveraging these techniques, we’ve made significant strides in AI development. Whether it’s training language models or optimizing neural networks, modern methods ensure faster, more accurate results. As we continue to innovate, the future of AI training looks brighter than ever.

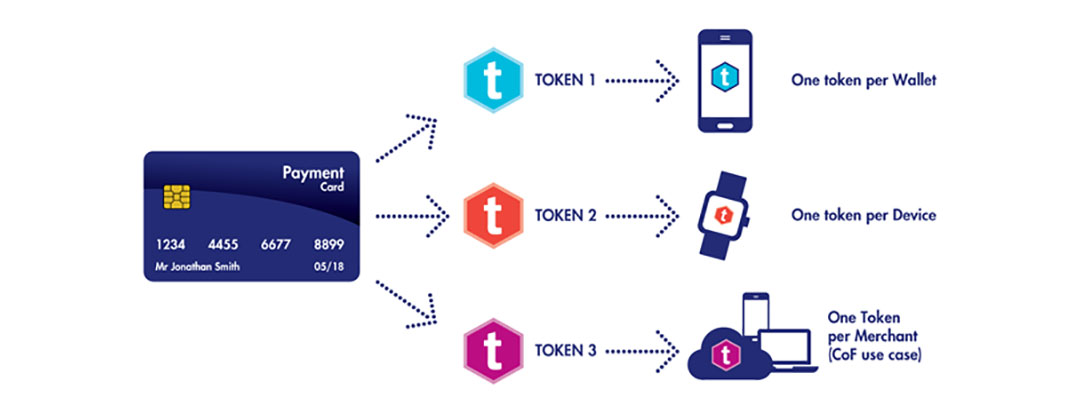

Real-world Deep Learning Workflows and Model Building

Building a deep learning model is a structured journey from raw data to actionable insights. Each step in this process plays a critical role in ensuring the model’s accuracy and efficiency. Let’s explore the key stages involved in creating and deploying a robust AI system.

Step-by-Step Process from Data Preprocessing to Deployment

The first step in any development project is data preprocessing. This involves cleaning and organizing raw data to ensure it’s suitable for training. Techniques like normalization and handling missing values are essential to maintain data quality.
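As a small illustration of those two techniques, the sketch below fills in missing values with the column mean and then rescales everything to the range 0 to 1. Mean imputation and min-max scaling are just one common pairing, chosen here as an assumption; the right choice depends on the dataset.

```python
def impute_missing(values):
    """Replace None entries with the mean of the values that are present."""
    present = [v for v in values if v is not None]
    mean = sum(present) / len(present)
    return [mean if v is None else v for v in values]

def min_max_normalize(values):
    """Rescale values linearly so the smallest maps to 0 and the largest to 1."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]  # constant column: nothing to scale
    return [(v - lo) / (hi - lo) for v in values]

raw = [10.0, None, 30.0, 20.0]      # assumed toy column with one missing value
clean = impute_missing(raw)
scaled = min_max_normalize(clean)

print(clean)   # [10.0, 20.0, 30.0, 20.0]
print(scaled)  # [0.0, 0.5, 1.0, 0.5]
```

In a real project the mean, minimum, and maximum would be computed on the training data only and then reused for validation and test data, so that no information leaks between them.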

Next, feature extraction transforms raw data into meaningful inputs for the model. This step is particularly important in language processing, where text data is converted into numerical formats like word embeddings. Proper feature extraction enhances the model’s ability to identify patterns.

Once the data is ready, the model building phase begins. This involves selecting the right architecture, such as convolutional or recurrent neural networks, based on the task at hand. The model is then trained using algorithms like backpropagation to minimize errors.

After training, the model undergoes evaluation to assess its performance. Metrics like accuracy, precision, and recall provide insights into how well the model generalizes to new data. This step ensures the model is ready for real-world applications.
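The three metrics named above can be computed directly from a list of predictions. This sketch assumes a binary classification task where 1 is the positive class; the labels and predictions are made-up toy values.

```python
def classification_metrics(y_true, y_pred):
    """Return accuracy, precision, and recall for binary labels (1 = positive)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # true positives
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false positives
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # false negatives
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # true negatives

    accuracy = (tp + tn) / len(pairs)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return accuracy, precision, recall

y_true = [1, 1, 1, 0, 0, 0, 0, 0]
y_pred = [1, 1, 0, 1, 0, 0, 0, 0]

accuracy, precision, recall = classification_metrics(y_true, y_pred)
print(f"accuracy={accuracy:.2f} precision={precision:.2f} recall={recall:.2f}")
```

Accuracy counts every correct prediction, precision asks how many predicted positives were right, and recall asks how many actual positives were found. Looking at all three matters most when one class is much rarer than the other.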

Finally, deployment involves integrating the model into existing systems. Continuous monitoring is crucial to track performance and make necessary adjustments. This ensures the model remains effective over time.

Key Stages in Deep Learning Workflow

| Stage | Description |
| --- | --- |
| Data Preprocessing | Cleaning and organizing raw data for training. |
| Feature Extraction | Transforming data into meaningful inputs. |
| Model Building | Selecting and training the right architecture. |
| Evaluation | Assessing model performance using metrics. |
| Deployment | Integrating the model into real-world systems. |

By following this structured process, we can build models that deliver reliable and accurate results. Whether it’s for language processing or image recognition, a well-defined workflow is the foundation of successful AI development.

Deep Learning in the Context of Artificial Intelligence and Industry 4.0

Industry 4.0 is reshaping the industrial landscape, and deep learning is at its core. This advanced technology is driving the development of smart systems, enabling automation, and enhancing efficiency across sectors. From manufacturing to logistics, its applications are transforming how industries operate.

At the heart of Industry 4.0 lies the ability to process vast amounts of data in real-time. Deep learning excels in this area, providing the tools needed for predictive maintenance, quality control, and process optimization. Its ability to analyze complex patterns makes it indispensable for modern automation.

Role in Modern Automation and Smart Systems

Deep learning plays a pivotal role in powering automation. By integrating with IoT devices, it enables smart systems to monitor and control processes with minimal human intervention. For example, in manufacturing, it can predict equipment failures before they occur, reducing downtime and saving costs.

Another key application is in detection systems. Whether it’s identifying defects in products or monitoring security threats, deep learning algorithms provide accurate and timely insights. This capability is crucial for maintaining high standards in production and safety.

Here’s how deep learning adds value to Industry 4.0:

- Predictive Maintenance: Reduces downtime by forecasting equipment failures.

- Quality Control: Detects defects in real-time, ensuring product consistency.

- Process Optimization: Enhances efficiency by analyzing and improving workflows.

The intersection of traditional AI techniques with emerging industrial technologies is creating new opportunities. For instance, combining deep learning with robotics has led to the development of autonomous systems that can perform complex tasks with precision.

“The integration of deep learning into Industry 4.0 is not just an advancement—it’s a revolution in how we approach automation and efficiency.”

As industries continue to evolve, the role of deep learning will only grow. Its ability to adapt and learn from data ensures that it remains at the forefront of innovation. From smart factories to intelligent supply chains, the possibilities are endless.

Conclusion

The journey of neural networks has reshaped industries and redefined innovation. From early breakthroughs like backpropagation to modern architectures, these systems have evolved to tackle complex challenges. Their ability to transform data through many stacked hidden layers has unlocked unprecedented potential.

Today, neural computing drives advancements in business, research, and industry. Whether it’s enhancing healthcare diagnostics or optimizing supply chains, the impact is transformative. The blend of historical insights and cutting-edge techniques continues to push boundaries.

We encourage you to explore tools like DeepSeek Chat to experience this type of innovation firsthand. As the field evolves, continuous learning and adaptation remain essential. The future of neural computing is bright, and its possibilities are limitless.

FAQ

What is the difference between deep learning and traditional machine learning?

Deep learning uses layered neural networks to automatically learn features from data, while traditional machine learning often requires manual feature extraction. This makes deep learning more powerful for complex tasks like image and speech recognition.

How do convolutional neural networks (CNNs) work?

CNNs are designed to process grid-like data, such as images. They use convolutional layers to detect patterns like edges and textures, pooling layers to reduce dimensionality, and fully connected layers for final predictions.
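The convolution and pooling steps described above can be sketched in a few lines of plain Python. This is a minimal illustration, not how a production framework implements it: the function names `conv2d` and `max_pool2d`, the toy 5x5 image, and the hand-picked edge kernel are all assumptions made for the example. A trained CNN would learn its kernel values from data.

```python
def conv2d(image, kernel):
    """Valid (no padding) 2D cross-correlation, the operation CNN layers apply."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def max_pool2d(feature_map, size=2):
    """Non-overlapping max pooling; leftover rows/columns that do not fill a window are dropped."""
    out_h = len(feature_map) // size
    out_w = len(feature_map[0]) // size
    return [
        [
            max(feature_map[i * size + di][j * size + dj]
                for di in range(size) for dj in range(size))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


# Toy 5x5 image: dark columns (0) on the left, bright columns (1) on the right.
image = [[0, 0, 1, 1, 1] for _ in range(5)]

# Hand-picked kernel that responds to dark-to-bright vertical edges (assumption).
edge_kernel = [[-1, 1],
               [-1, 1]]

features = conv2d(image, edge_kernel)  # 4x4 feature map, peaks at the edge
pooled = max_pool2d(features)          # 2x2 summary after pooling
```

The feature map is nonzero only in the column where the brightness changes, and pooling keeps that signal while shrinking the map. A fully connected layer would then take the flattened pooled values as input.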

What are the key applications of recurrent neural networks (RNNs)?

RNNs excel in handling sequential data, making them ideal for tasks like language translation, speech recognition, and time series forecasting. They carry a hidden state from one step to the next, so information from previous inputs is available when interpreting the current one.
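To make the idea of remembering previous inputs concrete, here is a minimal sketch of a vanilla (Elman-style) recurrent step in plain Python. The names `rnn_step` and `run_rnn` and the fixed weight values are assumptions chosen for illustration; in practice the weights are learned, and gated variants such as LSTMs are more common.

```python
import math


def rnn_step(x, h_prev, w_xh, w_hh, b_h):
    """One recurrent step: h_t = tanh(W_xh x_t + W_hh h_{t-1} + b_h)."""
    h_new = []
    for k in range(len(h_prev)):
        total = b_h[k]
        total += sum(w_xh[k][i] * x[i] for i in range(len(x)))
        total += sum(w_hh[k][j] * h_prev[j] for j in range(len(h_prev)))
        h_new.append(math.tanh(total))
    return h_new


def run_rnn(sequence, w_xh, w_hh, b_h):
    """Feed a sequence through the cell, returning the hidden state after each step."""
    h = [0.0] * len(b_h)  # start with an empty memory
    states = []
    for x in sequence:
        h = rnn_step(x, h, w_xh, w_hh, b_h)
        states.append(h)
    return states


# Hypothetical fixed weights: 1 input feature, 2 hidden units.
w_xh = [[1.0], [0.5]]
w_hh = [[0.5, 0.0], [0.0, -0.5]]
b_h = [0.0, 0.0]

# A single pulse followed by silence: later states still reflect the pulse.
states = run_rnn([[1.0], [0.0], [0.0]], w_xh, w_hh, b_h)
```

Even though the second and third inputs are zero, the hidden states at those steps are nonzero because they are computed from the previous state. That carried-over state is the network's memory of earlier inputs.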

Why are transformers important in natural language processing?

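Transformers revolutionized NLP by building entire models around attention mechanisms, which let every position in the input weigh every other position and focus on the most relevant parts. Because this removes the step-by-step recurrence of RNNs, training parallelizes well, which has led to breakthroughs in tasks like text generation, summarization, and question answering.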
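The attention idea can be illustrated with scaled dot-product attention, the core operation inside transformer layers. The sketch below uses plain Python lists and omits the multiple heads, learned projections, and masking that real models add; the function names and the toy query, key, and value vectors are assumptions for the example.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(queries, keys, values):
    """For each query, return a weighted average of the values.

    Weights are softmax(q . k / sqrt(d_k)), so keys that match the query
    more closely contribute more of their value to the output.
    """
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
            for k in keys
        ]
        weights = softmax(scores)
        out = [
            sum(w * v[c] for w, v in zip(weights, values))
            for c in range(len(values[0]))
        ]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


# Toy example: one query that points along the first dimension.
queries = [[4.0, 0.0]]
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]

outputs, weights = scaled_dot_product_attention(queries, keys, values)
```

Here the query aligns with the first and third keys, so those positions receive most of the attention weight and the second is largely ignored. In a transformer, the queries, keys, and values are all derived from the same input sequence through learned projections.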

What hardware is essential for deep learning?

GPUs are critical for deep learning due to their ability to handle parallel computations. High-performance CPUs, TPUs, and large memory capacities also play a role in training complex models efficiently.

How does backpropagation optimize neural networks?

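Backpropagation computes the gradient of the loss function with respect to every weight in the network by applying the chain rule backward, layer by layer, from the output to the input. An optimizer such as gradient descent then uses those gradients to update the weights, reducing the error and improving the model’s accuracy over successive training iterations.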
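A worked example helps here. The sketch below assumes the smallest possible network, one tanh hidden unit and one linear output, trained on a single hypothetical data point with a squared-error loss. The function names, starting parameters, and learning rate are illustrative choices, not a reference implementation.

```python
import math


def forward(params, x):
    """Forward pass: h = tanh(w1*x + b1), y_hat = w2*h + b2."""
    w1, b1, w2, b2 = params
    h = math.tanh(w1 * x + b1)
    y_hat = w2 * h + b2
    return h, y_hat


def loss_fn(params, x, y):
    """Squared-error loss for one example."""
    _, y_hat = forward(params, x)
    return 0.5 * (y_hat - y) ** 2


def backward(params, x, y):
    """Backpropagation: apply the chain rule from the loss back to each parameter."""
    w1, b1, w2, b2 = params
    h, y_hat = forward(params, x)
    d_yhat = y_hat - y             # dL/dy_hat
    d_w2 = d_yhat * h              # output-layer weight
    d_b2 = d_yhat                  # output-layer bias
    d_h = d_yhat * w2              # gradient flowing into the hidden unit
    d_z = d_h * (1.0 - h * h)      # through tanh: d tanh(z)/dz = 1 - tanh(z)^2
    d_w1 = d_z * x                 # hidden-layer weight
    d_b1 = d_z                     # hidden-layer bias
    return [d_w1, d_b1, d_w2, d_b2]


def gradient_step(params, grads, lr=0.1):
    """Plain gradient descent update: move each parameter against its gradient."""
    return [p - lr * g for p, g in zip(params, grads)]


# Hypothetical starting parameters [w1, b1, w2, b2] and one training example.
params = [0.5, 0.0, -0.3, 0.1]
x, y = 1.5, 0.8

initial_loss = loss_fn(params, x, y)
for _ in range(200):
    params = gradient_step(params, backward(params, x, y))
final_loss = loss_fn(params, x, y)
```

Note the division of labor: `backward` only computes gradients, while `gradient_step` is what changes the weights. Real frameworks automate the backward pass with automatic differentiation, but the chain-rule logic is the same.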

What industries benefit most from deep learning?

Healthcare, finance, cybersecurity, and autonomous driving are among the top industries leveraging deep learning for tasks like disease detection, fraud prevention, threat analysis, and self-driving technology.

What challenges exist in training deep learning models?

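Common challenges include overfitting, vanishing gradients, and the need for large datasets. Regularization and dropout reduce overfitting, data augmentation stretches limited datasets, and design choices such as ReLU activations, residual connections, and normalization layers help mitigate vanishing gradients.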
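Two of the techniques mentioned above, dropout and L2 regularization (weight decay), are simple enough to sketch directly. The version below is a minimal illustration using "inverted" dropout, where surviving activations are scaled up during training so that nothing changes at inference time. The function names and parameter values are assumptions for the example.

```python
import random


def dropout(activations, drop_prob, training=True, rng=random):
    """Inverted dropout: zero each activation with probability drop_prob.

    Survivors are divided by the keep probability so the expected value of
    each activation is unchanged. At inference time the input passes through.
    """
    if not training or drop_prob == 0.0:
        return list(activations)
    keep_prob = 1.0 - drop_prob
    return [
        a / keep_prob if rng.random() < keep_prob else 0.0
        for a in activations
    ]


def l2_penalty(weights, strength):
    """L2 regularization term added to the loss to discourage large weights."""
    return strength * sum(w * w for w in weights)


# Seeded generator so the example is repeatable.
rng = random.Random(0)
hidden = [1.0] * 1000

train_out = dropout(hidden, drop_prob=0.5, rng=rng)       # roughly half zeroed, rest doubled
eval_out = dropout(hidden, drop_prob=0.5, training=False)  # unchanged at inference
penalty = l2_penalty([1.0, -2.0], strength=0.01)
```

Because each training pass drops a different random subset of units, the network cannot rely on any single unit, which tends to reduce overfitting. The L2 term works differently: it is added to the training loss so that the optimizer is nudged toward smaller weights.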

How do generative models like GANs and VAEs work?

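GANs (Generative Adversarial Networks) train two competing networks: a generator that produces synthetic data and a discriminator that tries to tell it apart from real data. As each improves, the generated data becomes more realistic. VAEs (Variational Autoencoders) encode data into a distribution over a latent space, then decode points sampled from that space to generate new samples.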

What role does deep learning play in Industry 4.0?

Deep learning drives automation, predictive maintenance, and quality control in Industry 4.0. It enables smart systems to analyze vast amounts of data and make real-time decisions, enhancing efficiency and productivity.