What if the way AI learns and processes information could be fundamentally transformed? That’s precisely the ambition behind NVIDIA’s latest breakthrough: the Nemotron-CC dataset and its innovative curation pipeline. The promise of a trillion-token dataset raises important questions about the future of large language models (LLMs) and how they can be trained more effectively.

The Rise of Large Language Models

In recent years, large language models have become an essential part of various applications, from chatbots to content generators. These models rely on vast amounts of high-quality data to learn language patterns, context, and understanding. However, generating and curating this data effectively has become a challenge. Traditional methods often result in losing valuable information during data filtering processes, limiting the potential of these models.

The Need for Enhanced Data Quality

To address the challenges faced in LLM training, NVIDIA has introduced Nemotron-CC. This innovative approach aims to significantly improve data quality and quantity, which are crucial for achieving superior AI model performance. By leveraging a large corpus of tokens, NVIDIA hopes to enhance accuracy and capabilities across various applications of LLMs.

Introducing Nemotron-CC

NVIDIA’s unveiling of Nemotron-CC marks a turning point in the field of artificial intelligence. By integrating this dataset into their NeMo Curator, NVIDIA aims to enhance how data is curated for large language models.

What Makes Nemotron-CC Unique?

Nemotron-CC is not just another dataset; it encompasses a staggering trillion-token dataset tailored specifically for LLM training. Drawing from a colossal 6.3-trillion-token English language collection sourced from Common Crawl, Nemotron-CC promises to revolutionize the training methods used by AI developers.

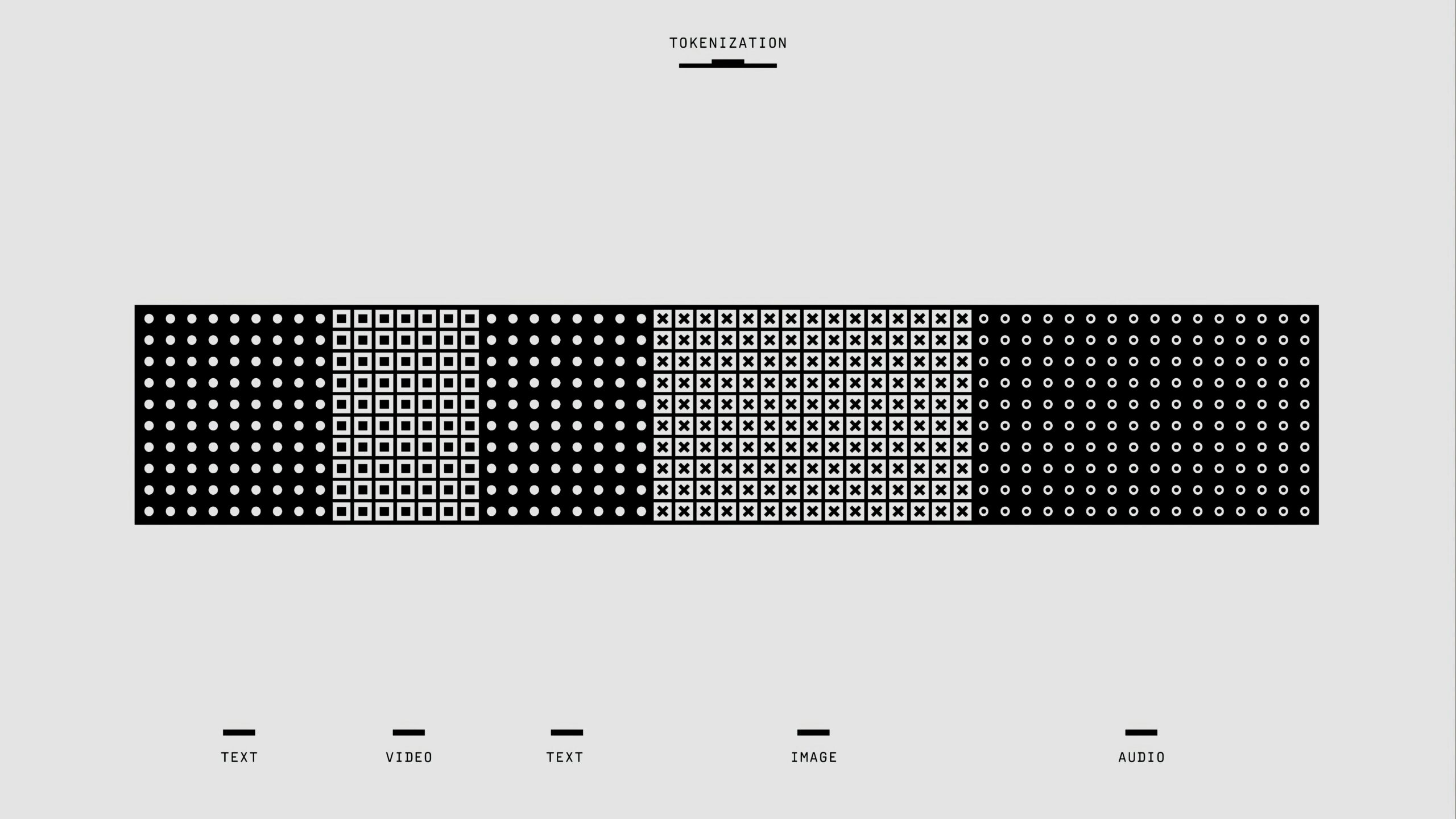

Understanding the Pipeline – Enhancements in Data Curation

The Nemotron-CC pipeline optimizes traditional data curation methods, addressing the limitations that have plagued model training. One of the significant issues with old methods is heuristic filtering, which often results in the exclusion of potentially useful data.

Classifier Ensembling and Synthetic Data Generation

Utilizing advancements in artificial intelligence, the Nemotron-CC pipeline employs classifier ensembling and synthetic data rephrasing. By doing so, it generates an impressive 2 trillion tokens of high-quality synthetic data. This capability enables the recovery of up to 90% of content that would have otherwise been discarded during typical filtering processes.

The Steps to Curate Quality Data

The actual process of data curation within the Nemotron-CC pipeline incorporates several sophisticated steps.

-

HTML-to-Text Extraction: The pipeline starts with extracting data from HTML, ensuring a clean and usable format.

-

Deduplication: To prevent redundancy, the process employs deduplication techniques, efficiently removing repeated information.

-

Heuristic Filters: A series of 28 heuristic filters are applied to refine the data further, ensuring that only quality content remains for further usage.

-

PerplexityFilter Module: This module helps in further refining the data, enhancing the quality of what is finally retained.

-

Quality Labeling: An ensemble of classifiers evaluates and categorizes documents based on predefined quality levels. This targeted approach enables the efficient generation of synthetic data.

-

Diverse Output Generation: The result is a diverse range of generated QA pairs, distilled content, and organized knowledge lists. These elements are instrumental in training models more effectively.

The Impact of Nemotron-CC on LLM Training

With such extensive resources available through Nemotron-CC, the implications for LLM training are significant.

Improved Model Performance

Models trained using the Nemotron-CC dataset have exhibited substantial performance improvements. For instance, a Llama 3.1 model trained on just a 1 trillion-token subset of this dataset achieved a remarkable increase of 5.6 points in the MMLU score compared to those trained on more conventional datasets.

Long Horizon Tokens

Even further, models trained on long-horizon tokens within Nemotron-CC demonstrate an additional 5-point boost in various benchmark scores. These improvements signify a notable advancement in the effectiveness of LLM training methodologies.

Practical Applications for Developers

For developers eager to harness the power of Nemotron-CC, NVIDIA has ensured that the pipeline is accessible and user-friendly. Available for those aiming to pretrain foundation models or execute domain-adaptive pretraining across different fields, the Nemotron-CC pipeline comes equipped with comprehensive tutorials and APIs.

Customization Options

This user-friendly design allows developers to customize the pipeline according to their specific requirements, making it easier to incorporate these advanced training techniques into their projects.

Conclusion: The Future of LLM Training

As NVIDIA pioneers new methods for training large language models through Nemotron-CC, the possibilities for AI development seem boundless. This groundbreaking dataset signals a shift towards improved data quality and expansive training capacities.

Addressing Future Challenges

It is essential to remain mindful of the challenges that may arise in the adoption and implementation of Nemotron-CC and similar technologies. While the dataset promises enhancements, users must still navigate the potential complexities associated with its application.

Ongoing Innovation in AI

Through the introduction of Nemotron-CC, NVIDIA is not just addressing current challenges but also paving the way for future innovations in AI. As they continue to refine these tools, AI developers and researchers will likely find new avenues to unlock the potential of large language models.

In conclusion, the emergence of the Nemotron-CC pipeline signifies a new chapter for large language models. By optimizing data curatorial practices and leveraging unprecedented amounts of information, it holds the potential to reshape the landscape of AI, creating smarter and more versatile models that can better understand and respond to human language. As this technology continues to unfold, AI applications across various domains stand to gain tremendously from NVIDIA’s vision.

Source: https://Blockchain.News/news/nvidia-unveils-nemotron-cc-trillion-token-dataset